About Me

I am a Ph.D. student at Virginia Tech, Blacksburg, where I am affiliated with Sanghani Center for Artificial Intelligence and Data Analytics. I am working on making generative models more controllable and customizable to further enhance control over them. Before joining Virginia Tech, I obtained my M.Sc. & B.Sc. degree from Deprtament of Computer Engineering at Bilkent University (M.Sc. Thesis).

At Virginia Tech, I am currently working on the controllability aspect of generative models under the supervison of Pinar Yanardag. In the past, I have been fortunate to work with Aysegul Dundar at Bilkent University, Yijun Li at Adobe Research, and Kfir Aberman and Kuan-Chieh Jackson Wang at Snap Research.

Research Interests

- Multimodal controllable generative models

- Image and video generation and editing

- Customization of generative models

- Representations learned by generative models

Recent Research

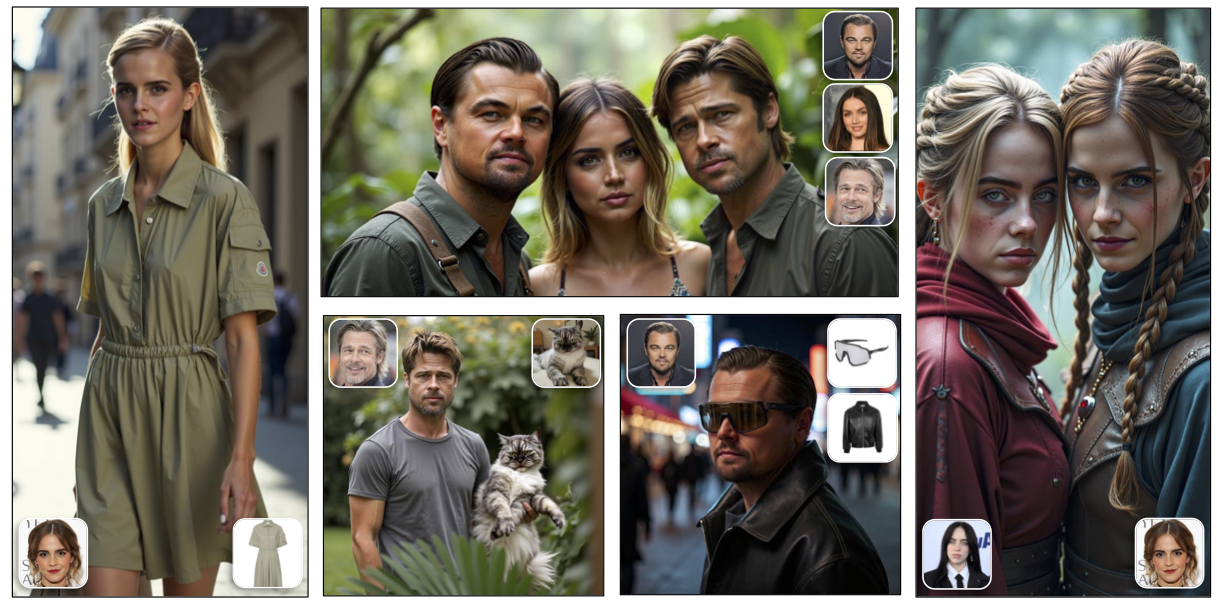

LoRAShop: Training-Free Multi-Concept Image Generation and Editing with Rectified Flow Transformers

Yusuf Dalva, Hidir Yesiltepe, Pinar Yanardag

NeurIPS 2025 (Spotlight - top 3%)

FluxSpace: Disentangled Semantic Editing in Rectified Flow Transformers

Yusuf Dalva, Kavana Venkatesh, Pinar Yanardag

CVPR 2025

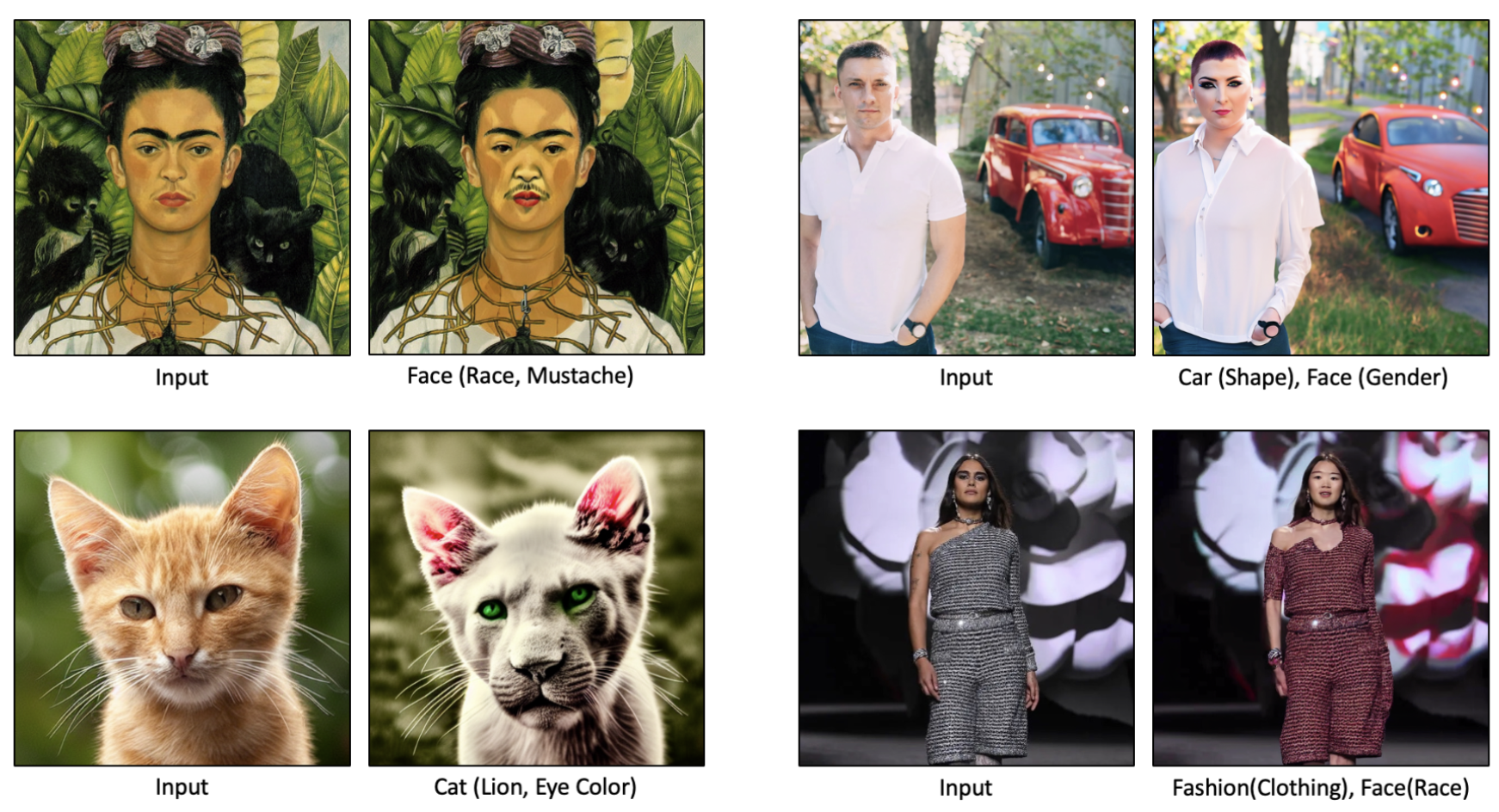

NoiseCLR: A Contrastive Learning Approach for Unsupervised Discovery of Interpretable Directions in Diffusion Models

Yusuf Dalva, Pinar Yanardag

CVPR 2024 (Oral - top 0.8%)

For the full list of publications, visit the Publications Page and my Google Scholar profile.

News & Updates

September 2025: LoRAShop got accepted to NeurIPS 2025 as a spotlight!

February 2025: FluxSpace got accepted to CVPR 2025!

December 2024: FluxSpace and Context Canvas now available at arXiv.

May 2024: Joined Adobe Research as a Research Scientist Intern.

May 2024: GANTASTIC now available at arXiv.

February 2024: NoiseCLR got accepted to CVPR 2024 for an oral presentation.

September 2023: "Image-to-Image Translation For Face Attribute Editing With Disentangled Latent Directions" won Best Master Thesis Award from IEEE CS Turkey Chapter.

August 2023: Started my Ph.D. at Virginia Tech.